An internal web application built by Libraries faculty and staff leverages computer vision to improve the discoverability of archival photos by allowing archivists to quickly find groups of images depicting similar subjects and add descriptive metadata tags in bulk.

Computer-Aided Metadata generation for Photo archives Initiative (CAMPI), was inspired by a request from the CMU Marketing and Communications team, which regularly works with the University Archives to source images for online and print materials.

A MarCom staff member contacted the Archives in June to request images for an email to incoming students. They were seeking early images of the Computation Center, which housed the University's first computer – an IBM 650 that arrived in 1956. While the Archives has a number of photos identified as the Computation Center, the earliest were from the late 1960s.

'We know, from experience, that our photo collection is not fully inventoried, and there are images with incorrect descriptions,' said University Archivist Julia Corrin. 'I was interested in a tool that would let me to see if I could identify any earlier photos of the Computation Center space or find other images that were improperly labeled.'

The University Archives has approximately one million photos in its collection, 20,000 of which are digitized. That number grows larger each year as new materials are added. Growing at this rate makes it impossible for archivists to individually describe and categorize each picture – a necessary step to make them searchable by the public – so labels often lack specificity. There are so many images tagged with 'Computers,' 'Computing,' and 'Students in Lab' that the archivists do not often have time to sort through the many images with generic tags. They focus on images with more specific labels, such as "Computation Center," which can mean that other, perhaps better, images are never identified.

CAMPI allows the archivists to do this at scale by using computer vision, a term that refers to software that performs visual tasks with images, such as clustering together similar photographs, assigning photographs to predefined categories, and identifying objects and faces in photographs.

The app was developed between May and September 2020 by in-house by a team that included University Archivist Julia Corrin, Digital Humanities Developer Matt Lincoln, Project Archivist Emily Davis, Digital Humanities Program Director Scott Weingart, Metadata Specialist Angelina Spotts, and Scanner Operator Jon McIntire.

'The CMU General Photography Collection was a great candidate for this project because it is in high demand, yet has so little metadata,' said Lincoln. 'Even a partly successful project would greatly improve the collection metadata, and could provide a possible solution for metadata generation if the archives were ever funded to digitize the entire collection.'

Instead of training a new computer vision to categorize the images automatically, the project team focused their efforts on designing a responsive user interface that would let archivists leverage visual similarity searching with their existing metadata about how the collection was organized.

For example, using the web app, an archivist trying to tag pictures of 'students in class' could begin tagging with a clearly labled photo of a class in a lecture hall in 1963, which would pull up other pictures taken from similar angles of students facing lecturers in many different kinds of rooms across campus, across many different years. Because the software also stored information about which roll of film the photo originally came from, archivists could quickly navigate from one of these computer-vision-supplied photographs to look at the rest of that image's roll, quickly adding the 'students in class' tag to other shots from the same session that might have been missed by the computer. At the end of this process, the archivists would return to the visual similarity results to find the next roll of film to prioritize for consideration.

This approach combined the best of both worlds: utilizing the existing structure of the photo collection to make tagging efficient and context-sensitive, while applying visual search to nimbly navigate across folders and boxes of the collection and surface potential photos for tagging that archivists might have otherwise missed.

When deployed for the Computation Center search, the app used images already identified as the center as 'seed' images. These seed images pulled up other similar images–typically of rooms housing early computer equipment. While it was unable to find an image dating back to 1956, the tool did unearth a number of earlier photos of the space that had incomplete labels.

Through the use of CAMPI, the archivists were able to quickly identify the best images of the Computation Center from a larger set. Before CAMPI, the traditional review process for photographs would consist of opening each scanned image separately and comparing them to identify the best option. CAMPI's ability to pull images together from multiple 'jobs' (a term inherited from the original photographers–each 'job' is typically a single roll of 35mm film with an individual job code and label) in an interface that allows the user to quickly inspect options speeds up the immeasurably. The use of CAMPI on historical photographs also highlighted the need for computer vision similarity models that work better with and black and white images.

'This project suggests new ways for us–and our users–to look at and identify images of interest,' said Corrin. 'You no longer need to know exactly what you're looking for to uncover it–there is more room for exploration. If this were to be put into full production, it would give us the chance to surface and use images that haven't been seen in 50 years or longer by enabling us to appraise and describe parts of our image collections with new knowledge and efficiency.'

For now, CAMPI is just a prototype. While the first exploratory project is over, data from the tagging and deduplication work done during this project will be used as the photographs are migrated to a new digital collections system that will make them publicly accessible. A white paper explaining the project in greater depth that is now available includes a high-level technical architecture that discusses how such a system would connect to existing collection catalogues and image databases that libraries and archives already use.

'Libraries and archives around the world have been actively exploring how they can use computer vision to enhance their collections data to make it more accessible and searchable by the public,' said Lincoln. "CAMPI's contribution to the field is a focus on the archivist experience. The user interfaces more seamlessly connect computer vision technology with existing archival organization while keeping the human experts ‘in the loop.''

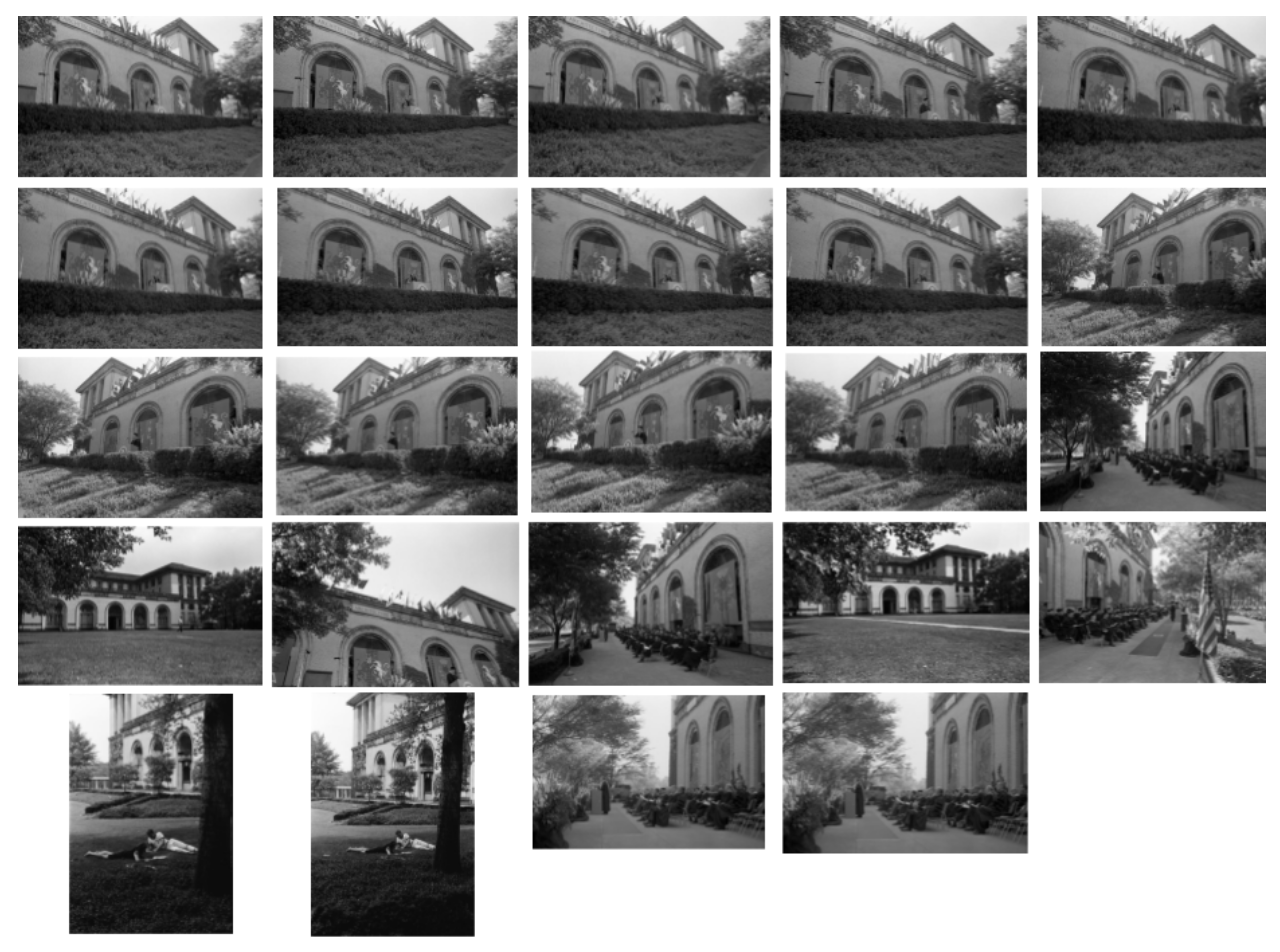

Image caption: A montage of the resulting photographs from a search for the College of Fine Arts building. Similarity search proved particularly effective at clustering buildings together—all these shots show different views of the College of Fine Arts, with many of the images coming from different parts of the collection outside the original job.